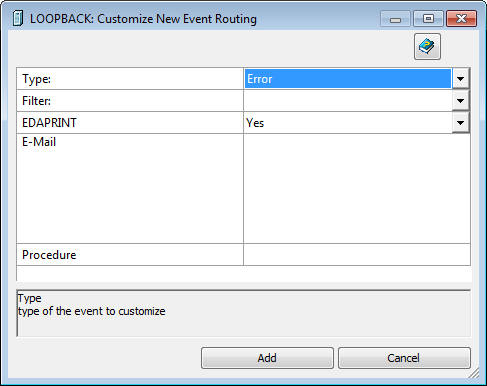

The DataMigrator server can be configured so that, when an error occurs while writing to the ETL log, one or more of the following actions take place:

- A specified procedure is executed that backs up and re-creates the ETL log and statistics.

- A message is written to the server log (edaprint.log).

- Email notifications are sent to a named address at a specified frequency.

The following procedure shows how to back up and re-create the ETL log and statistics.

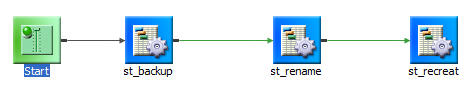

- Create a new process flow and name it pf_backup_recreate.

- Add the following stored procedures to the process flow, in this exact order: st_backup, st_rename, and st_recreate.

Each of these stored procedures is described below.

- st_backup

This procedure creates the etllogbk.foc and etlstabk.foc files in the \dm\catalog\ directory. These files are copies of the etllog.foc and etlstats.foc files. Example code for st_backup:

```
SHH CRTBACKUP
END
```

- st_rename

This step is optional. This procedure prevents the backup files from being overwritten during the next backup process. It renames etllogbk.foc and etlstabk.foc by appending the current date and time to each file name, before the .foc extension, for example, etllogbk_20101026_112025.foc. Example code for st_rename:

```
-* Full paths of the backup files; adjust them to match your server installation.
-SET &LOGBK = 'c:\ibi\srv77\dm\catalog\etllogbk.foc';
-SET &STABK = 'c:\ibi\srv77\dm\catalog\etlstabk.foc';
-* New names: base name, date (YYYYMMDD), and time of day with the dots removed (HHMMSS).
-SET &LOGBKREN = 'etllogbk' || '_' || &YYMD || '_' || EDIT(&TOD,'99$99$99') || '.foc';
-SET &STABKREN = 'etlstabk' || '_' || &YYMD || '_' || EDIT(&TOD,'99$99$99') || '.foc';
-* Rename both backup files with the operating system REN command.
!REN &LOGBK &LOGBKREN
!REN &STABK &STABKREN
END
```

- st_recreate

This procedure deletes the original etllog.foc and etlstats.foc files and replaces them with new, initialized files. Example code for st_recreate:

```
EX ETLCRTLS ALL, Y, N
END
```

The process flow should look like the following image.

- Run the new process flow once by clicking Run or Submit, or schedule the flow. You can also use Event Routing, as described in How to Send an Email Message When There Is an Error Writing to the ETL Log.
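To make the file handling in st_backup and st_rename concrete, the following Python sketch reproduces the same file names outside DataMigrator. It is a hypothetical illustration, not a replacement for the stored procedures: it assumes the live files sit directly in a catalog directory supplied by the caller, copies them with `shutil.copy2` instead of running SHH CRTBACKUP, and leaves out the re-create step, which relies on ETLCRTLS inside the server. The names `BACKUP_NAMES`, `timestamped_name`, and `backup_and_rename` are invented for this example.

```python
import shutil
import tempfile
from datetime import datetime
from pathlib import Path

# Hypothetical mapping: live ETL file -> backup copy name (as described for st_backup).
BACKUP_NAMES = {
    "etllog.foc": "etllogbk.foc",
    "etlstats.foc": "etlstabk.foc",
}


def timestamped_name(stem: str, when: datetime) -> str:
    """Return a name such as 'etllogbk_20101026_112025.foc'.

    The date part matches &YYMD (YYYYMMDD); the time part matches
    EDIT(&TOD,'99$99$99'), which turns HH.MM.SS into HHMMSS.
    """
    return f"{stem}_{when:%Y%m%d}_{when:%H%M%S}.foc"


def backup_and_rename(catalog: Path, when: datetime) -> list[Path]:
    """Copy each live file to its backup name, then give the backup a
    timestamped name so that the next backup cannot overwrite it."""
    renamed = []
    for live, backup in BACKUP_NAMES.items():
        backup_path = catalog / backup
        shutil.copy2(catalog / live, backup_path)  # stands in for st_backup
        target = catalog / timestamped_name(backup_path.stem, when)
        backup_path.rename(target)  # stands in for st_rename
        renamed.append(target)
    return renamed


if __name__ == "__main__":
    # Demo in a throwaway directory with placeholder files.
    with tempfile.TemporaryDirectory() as tmp:
        catalog = Path(tmp)
        for name in BACKUP_NAMES:
            (catalog / name).write_text("placeholder")
        for path in backup_and_rename(catalog, datetime(2010, 10, 26, 11, 20, 25)):
            # prints etllogbk_20101026_112025.foc, then etlstabk_20101026_112025.foc
            print(path.name)
```

Passing a single `datetime` to both renames guarantees that the log backup and the statistics backup carry the same stamp, which makes the pair easy to match later.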